Can you make sense of it? If yes, great; if not, keep reading!

Data is growing at a staggering rate, and its volume is only going to increase. With years of data automation and smart data processes in place, organizations are in dire need of a specialized data science workforce that can make sense of this large pool of data. Understanding these big numbers is an art, and building a thriving career around it takes strategic moves. If you think you can make a difference in the way data is perceived and understood, then the data science industry is for you.

With organizations struggling to make these big data numbers comprehensible, now is the right time to get a data science career rolling. According to McKinsey, by 2025 the demand for data transformation will be extremely high: most organizations are expected to become data-driven, with nearly every aspect of work optimized by data. That requires robust data management practices, advanced analytics, and a strong data-driven culture to unlock data's full potential, essentially making data transformation a critical factor for competitive advantage. Because a successful transformation depends on understanding what form the data is in and what results are expected, a skilled data scientist is critical to the process. Join us as we dig deeper into data transformation, how it differs from data visualization, and many other facets of the domain.

Data Transformation- An Understanding

In layman's terms, data transformation is the process of converting data from one format to another: raw data is turned into a cleansed, validated, and ready-to-use format. Structuring data this way supports quality decision-making and business growth. Quite simple, isn’t it?
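As a rough illustration of "raw to cleansed, validated, and ready-to-use", here is a minimal Python sketch. The records, field names, and validation rules are all hypothetical and chosen only for this example; a real pipeline would define its own.

```python
from datetime import datetime

# Hypothetical raw records, as they might arrive from a spreadsheet export.
RAW_ROWS = [
    {"customer": "  Alice ", "amount": "1,200.50", "date": "2024-01-15"},
    {"customer": "BOB", "amount": "n/a", "date": "2024-01-16"},
    {"customer": "carol", "amount": "300", "date": "16/01/2024"},
]

# Date formats this sketch assumes may appear in the raw data.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def transform_row(row):
    """Return a cleaned copy of one row, or None if it fails validation."""
    # Cleanse: trim whitespace and normalize capitalization.
    name = row["customer"].strip().title()

    # Validate and convert the amount; reject rows that are not numeric.
    try:
        amount = float(row["amount"].replace(",", ""))
    except ValueError:
        return None

    # Standardize the date to ISO format, trying each known input format.
    parsed = None
    for fmt in DATE_FORMATS:
        try:
            parsed = datetime.strptime(row["date"], fmt).date()
            break
        except ValueError:
            continue
    if parsed is None:
        return None

    return {"customer": name, "amount": amount, "date": parsed.isoformat()}


def transform(rows):
    """Transform every row and keep only the ones that passed validation."""
    cleaned = (transform_row(row) for row in rows)
    return [row for row in cleaned if row is not None]
```

Calling `transform(RAW_ROWS)` keeps the Alice and Carol rows in a consistent shape and drops the Bob row, whose amount cannot be parsed. Whether invalid rows should be dropped, flagged, or repaired is a design choice that depends on the project.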

Data Transformation- A Data Scientist’s Sword or A Wasted Effort

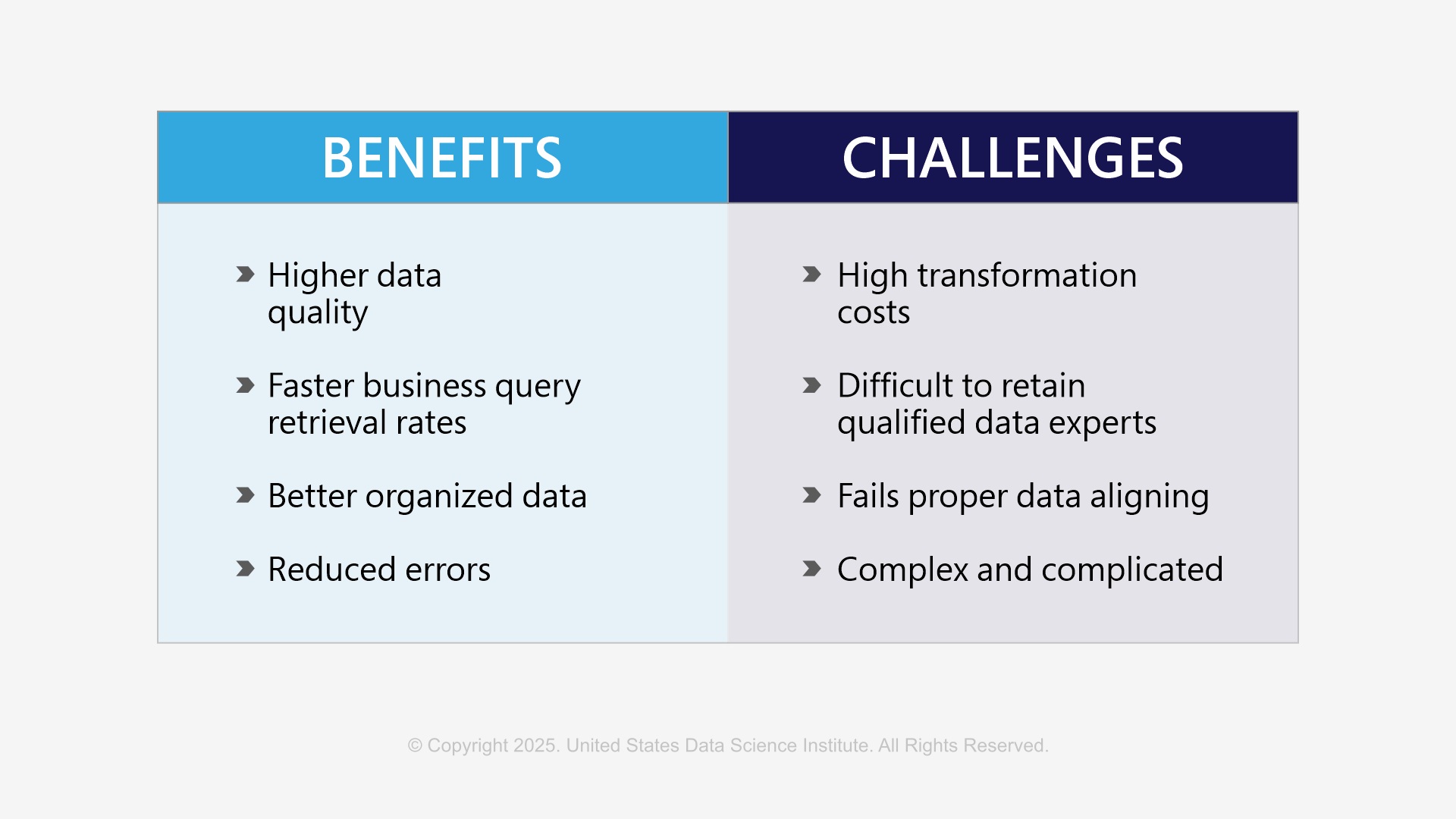

Data transformation is indeed a blessing and robust armor for qualified data science professionals working hard to streamline business processes. Data transformation skills help accelerate business growth while facilitating informed decision-making. They help stakeholders and data science experts anticipate what lies ahead and forecast trends using data visualization and machine learning models. They also empower data scientists to extract the true meaning of big numbers and can bring a lot of positive change to the way data is perceived.

What is the Big Deal?

As businesses evolve, their task pipelines create inconsistencies that lead to flawed or messy data. Data transformation addresses these common issues by refining and standardizing data throughout business processes. It acts as a critical intermediary that makes information reliable, clean, and accurate.

The Procedure

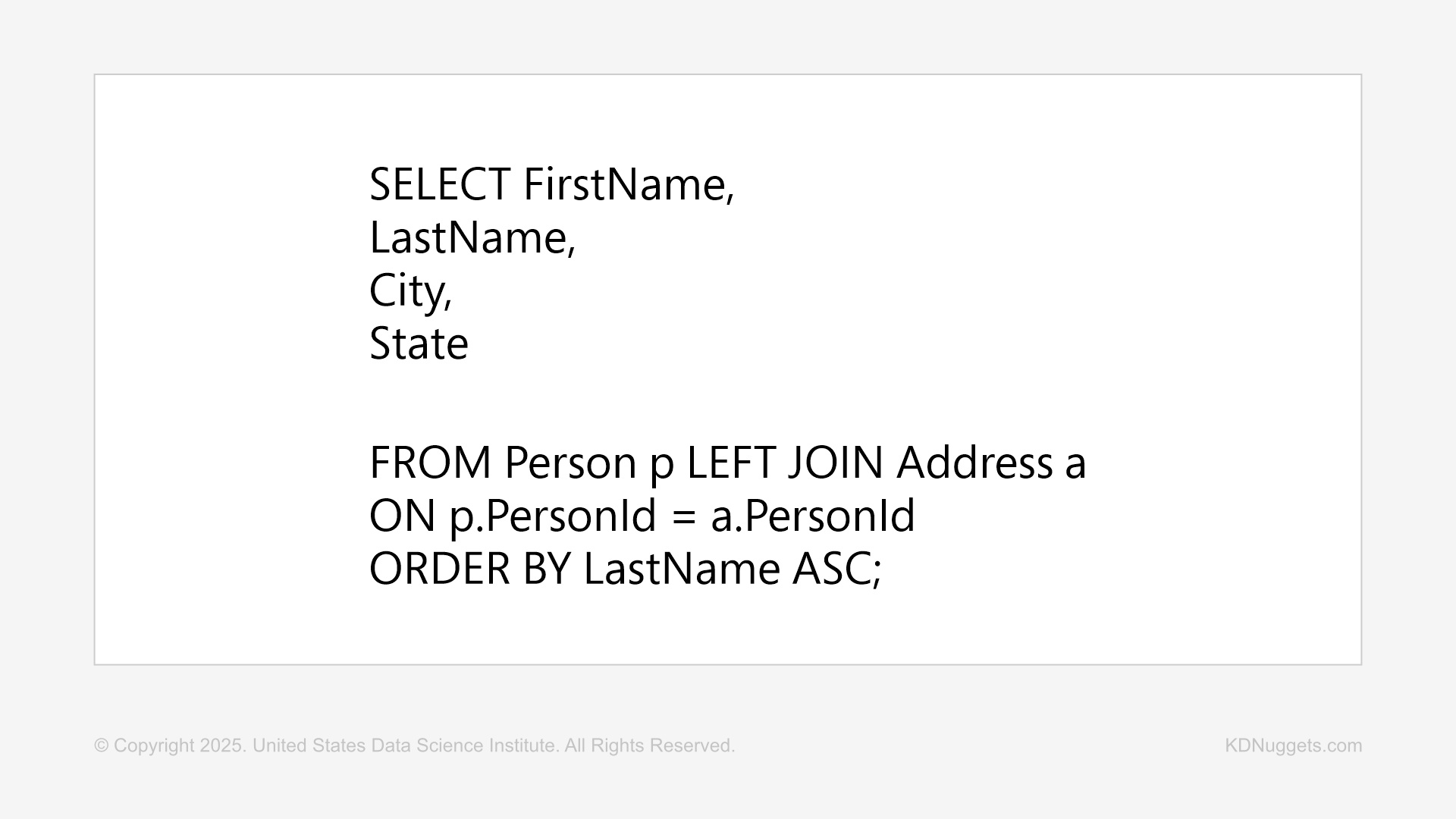

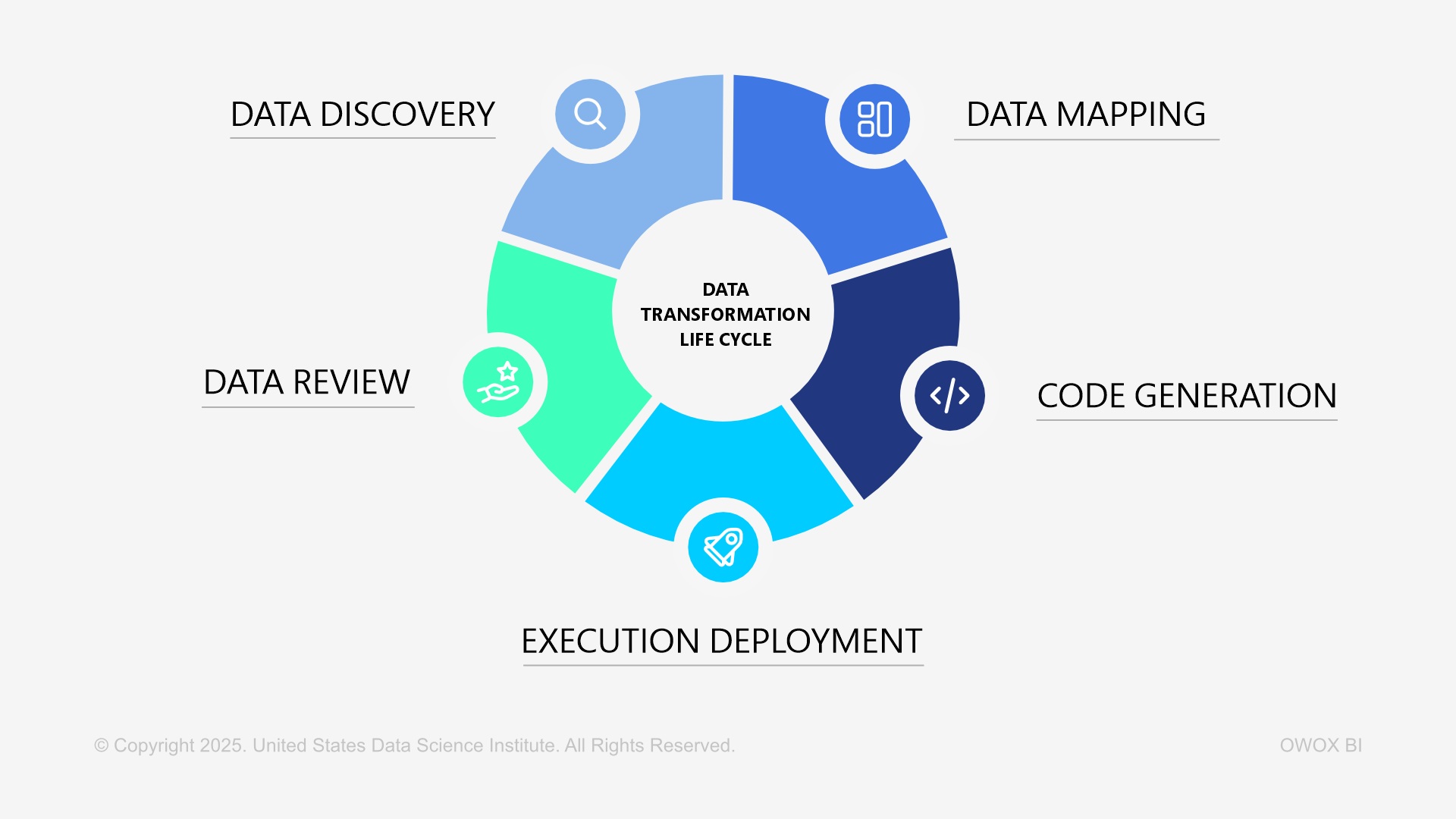

Data analysts, data engineers, and data visualization experts utilize the following steps to perform data transformation:

The Technique Types

Popular Tools

Many data transformation tools focus on the process itself, managing the loops involved in data transformation. Alteryx Transform, IBM InfoSphere DataStage, Microsoft Azure Data Factory, SAP Data Services, Oracle Cloud Infrastructure GoldenGate, Informatica Intelligent Data Platform, and Talend Open Studio are some of the popular data transformation tools heavily deployed in data pipelines.

Checklist to Find the Best Data Transformation Tool Fit

The tool should be able to analyze data thoroughly to identify characteristics such as its scale, distribution, and outliers.
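To make "scale, distribution, and outliers" concrete, here is a minimal profiling sketch using only Python's standard library. The 1.5 × IQR outlier rule is a common convention assumed for this example, not something any particular tool mandates, and the function name `profile` is hypothetical.

```python
import statistics


def profile(values):
    """Summarize scale, distribution, and outliers for one numeric column.

    Expects at least two values.
    """
    ordered = sorted(values)

    # Quartiles describe the distribution; quantiles(n=4) returns Q1, median, Q3.
    q1, median, q3 = statistics.quantiles(ordered, n=4)

    # Assumed convention: flag points more than 1.5 * IQR outside the quartiles.
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr

    return {
        "min": ordered[0],            # scale: smallest value
        "max": ordered[-1],           # scale: largest value
        "mean": statistics.mean(ordered),
        "median": median,
        "stdev": statistics.stdev(ordered),
        "outliers": [v for v in ordered if v < low or v > high],
    }
```

For the column `[10, 12, 11, 13, 12, 11, 95]`, the profile reports a minimum of 10, a maximum of 95, a median of 12, and flags 95 as the only outlier.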

Determining the project’s objectives, and what insights need to be gained from the data, is central. Setting expectations is crucial!

Reviewing the analysis or modeling techniques that will be applied to the data after transformation helps identify transformation techniques that are compatible with them.
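One illustration of matching a transformation to what comes next, offered as an example rather than a prescription: distance-based models such as k-nearest neighbors are sensitive to feature scale, so numeric columns are often rescaled before modeling. The sketch below shows two common options, min-max scaling and standardization; the function names are hypothetical.

```python
import statistics


def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lowest, highest = min(values), max(values)
    if highest == lowest:
        # A constant column carries no spread; map everything to 0.0.
        return [0.0 for _ in values]
    return [(v - lowest) / (highest - lowest) for v in values]


def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mean = statistics.mean(values)
    spread = statistics.pstdev(values)
    if spread == 0:
        # A constant column cannot be standardized; map everything to 0.0.
        return [0.0 for _ in values]
    return [(v - mean) / spread for v in values]
```

For example, `min_max_scale([10, 20, 30])` gives `[0.0, 0.5, 1.0]`. Which of the two suits a project depends on the downstream technique and on how the data is distributed.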

Choose the technique that has a positive impact on the data transformation process.

Test, trial, experiment, and iterate until you find the tool that fits best, delivers a massive improvement in data quality, and fulfills the project objectives hassle-free.

The selected tool must support the current data volumes and workflow complexity, and should come with comprehensive and responsive vendor support. Open-source tools should have an active, responsive, and helpful user community.

Having talked about data transformation, its meaning, advantages, techniques, challenges, and toolkit, it is time to stress the relevance of trusted data science certifications and courses that can build all the above-stated competencies and understanding in a structured manner. As data grows to enormous volumes, it is imperative to master data transformation processes and techniques to streamline business processes for the greater good. Amplify your data science skills now!
